Measure what is measurable, and make measurable what is not so.

—Galileo Galilei

Researchers Tara MacDonald and Alanna Martineau were interested in the effect of female university students’ moods on their intentions to have unprotected sexual intercourse [MM02]. In a carefully designed empirical study, they found that being in a negative mood increased intentions to have unprotected sex - but only for students who were low in self-esteem. Although there are many challenges involved in conducting a study like this, one of the primary ones is the measurement of the relevant variables. In this study, the researchers needed to know whether each of their participants had high or low self-esteem, which of course required measuring their self-esteem. They also needed to be sure that their attempt to put people into a negative mood (by having them think negative thoughts) was successful, which required measuring their moods. Finally, they needed to see whether self-esteem and mood were related to participants’ intentions to have unprotected sexual intercourse, which required measuring these intentions.

To students who are just getting started in psychological research, the challenge of measuring such variables might seem insurmountable. Is it really possible to measure things as intangible as self-esteem, mood, or an intention to do something? The answer is a resounding yes, and in this chapter we look closely at the nature of the variables that psychologists study and how they can be measured. We also look at some practical issues in psychological measurement.

The Rosenberg Self-Esteem Scale [Ros65] is one of the most common measures of self-esteem and the one that MacDonald and Martineau used in their study. Participants respond to each of the 10 items (below) using a 4-point rating scale: Strongly Agree, Agree, Disagree, Strongly Disagree.

Items 1, 2, 4, 6, and 7 are scored by assigning 3 points for each Strongly Agree response, 2 for each Agree, 1 for each Disagree, and 0 for each Strongly Disagree. The scoring for Items 3, 5, 8, 9, and 10 are reversed, with 0 points assigned for each Strongly Agree, 1 point for each Agree, and so on. The overall score is the total number of points.

Measurement is the assignment of scores to individuals (or families, or schools, or neighborhoods, etc.) so that the scores represent some characteristic of the individuals (or families, etc.). This very general definition is consistent with the kinds of measurement that everyone is familiar with - for example, weighing oneself by stepping onto a bathroom scale, or checking the internal temperature of a roasting turkey by inserting a meat thermometer. It is also consistent with measurement in the other sciences. In physics, for example, one might measure the potential energy of an object in Earth’s gravitational field by finding its mass and height (which of course requires measuring those variables) and then multiplying them together along with the gravitational acceleration of Earth (9.8 m/s2). The result of this procedure is a score that represents the object’s potential energy.

This general definition of measurement is consistent with measurement in psychology too (psychological measurement is often referred to as psychometrics). Imagine, for example, that a cognitive psychologist wants to measure a person’s working memory capacity - his or her ability to hold in mind and think about several pieces of information all at the same time. To do this, she might use a backward digit span task, in which she reads a list of two digits to the person and asks him or her to repeat them in reverse order. She then repeats this several times, increasing the length of the list by one digit each time, until the person makes an error. The length of the longest list for which the person responds correctly is the score and represents his or her working memory capacity. Or imagine a clinical psychologist who is interested in how depressed a person is. He administers the Beck Depression Inventory, which is a 21-item self-report questionnaire in which the person rates the extent to which he or she has felt sad, lost energy, and experienced other symptoms of depression over the past 2 weeks. The sum of these 21 ratings is the score and represents his or her current level of depression.

The important point here is that measurement does not require any particular instruments or procedures. It does not require placing individuals or objects on bathroom scales, holding rulers up to them, or inserting thermometers into them. What it does require is some systematic procedure for assigning scores to individuals or objects so that those scores represent the characteristic of interest.

Many variables studied by psychologists are straightforward and simple to measure. These include age, height, weight, and birth order. You can ask people how old they are and be reasonably sure that they know and will tell you. Although people might not know or want to tell you how much they weigh, you can have them step onto a bathroom scale. Other variables studied by psychologists - perhaps the majority - are not so straightforward or simple to measure. We cannot accurately assess people’s level of intelligence by looking at them, and we certainly cannot put their self-esteem on a bathroom scale. These kinds of variables are called constructs (pronounced CON-structs) and include personality traits (e.g., extroversion), emotional states (e.g., fear), attitudes (e.g., toward taxes), and abilities (e.g., athleticism).

Psychological constructs cannot be observed directly. One reason is that they often represent tendencies to think, feel, or act in certain ways. For example, to say that a particular university student is highly extroverted does not necessarily mean that she is behaving in an extroverted way right now. In fact, she might be sitting quietly by herself, reading a book. Instead, it means that she has a general tendency to behave in extroverted ways (talking, laughing, etc.) across a variety of situations. Another reason psychological constructs cannot be observed directly is that they often involve internal processes. Fear, for example, involves the activation of certain central and peripheral nervous system structures, along with certain kinds of thoughts, feelings, and behaviors - none of which is necessarily obvious to an outside observer. Notice also that neither extroversion nor fear “reduces to” any particular thought, feeling, act, or physiological structure or process. Instead, each is a kind of summary of a complex set of behaviors and internal processes.

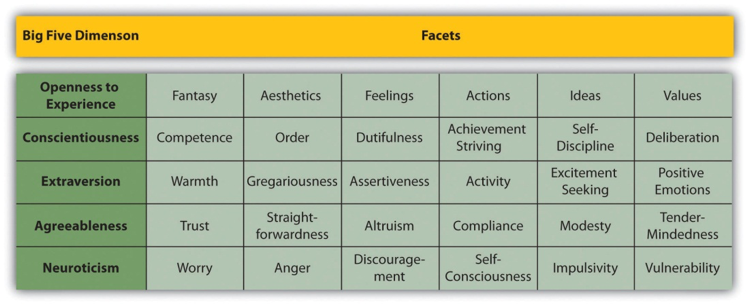

Fig. 3.1 The Big Five is a set of five broad dimensions that capture much of the variation in human personality. Each of the Big Five can even be defined in terms of six more specific constructs called “facets” [CM92]. ¶

The conceptual definition of a psychological construct describes the behaviors and internal processes that make up that construct, along with how that construct relates to other constructs and variables. For example, a conceptual definition of neuroticism (another one of the Big Five) would be that it is made up of people’s tendency to experience negative emotions such as anxiety, anger, and sadness across a variety of situations. This definition might also specify that neuroticism has a strong genetic component, remains fairly stable over time, and is positively correlated with the tendency to experience pain and other physical symptoms.

Students sometimes wonder why, when researchers want to understand a construct like self-esteem or neuroticism, they do not simply look it up in the dictionary. One reason is that many scientific constructs do not have counterparts in everyday language (e.g., working memory capacity). More important, researchers are in the business of developing definitions that are more detailed and precisem and that more accurately describe the way the world is, than the informal definitions in the dictionary. As we will see, researchers do this by proposing conceptual definitions, testing them empirically, and revising them as necessary. Sometimes they throw them out altogether. This is why the research literature often includes different conceptual definitions of the same construct. In some cases, an older conceptual definition has been replaced by a newer one that fits and works better. In others, researchers are still in the process of deciding which of various conceptual definitions is the best.

An operational definition is a definition of a variable in terms of precisely how it is to be measured. These measures generally fall into one of three broad categories. Self-report measures are those in which participants report on their own thoughts, feelings, and actions, as with the Rosenberg Self-Esteem Scale. Behavioral measures are those in which some other aspect of participants’ behavior is observed and recorded. This is an extremely broad category that includes the observation of people’s behavior both in highly structured laboratory tasks and in more natural settings. A good example of the former would be measuring working memory capacity using the backward digit span task. A good example of the latter is a famous operational definition of physical aggression from researcher Albert Bandura and his colleagues [BRR06]. They let each of several children play for 20 minutes in a room that contained a clown-shaped punching bag called a Bobo doll. They filmed each child and counted the number of acts of physical aggression he or she committed. These included hitting the doll with a mallet, punching it, and kicking it. Their operational definition, then, was the number of these specifically defined acts that the child committed during the 20-minute period. Finally, physiological measures are those that involve recording any of a wide variety of physiological processes, including heart rate and blood pressure, galvanic skin response, hormone levels, and electrical activity and blood flow in the brain.

For any given variable or construct, there can be multiple operational definitions. Stress is a good example. A rough conceptual definition is that stress is an adaptive response to a perceived danger or threat that involves physiological, cognitive, affective, and behavioral components. But researchers have operationally defined it in several different ways. The Social Readjustment Rating Scale is a self-report questionnaire that asks people to identify stressful events that they have experienced in the past year and assigns points for each one depending on severity. For example, a man who has been divorced (73 points), changed jobs (36 points), and had a change in sleeping habits (16 points) in the past year would have a total score of 125. The Daily Hassles and Uplifts Scale is similar but focuses on everyday stressors like misplacing things and being concerned about one’s weight. The Perceived Stress Scale is another self-report measure that focuses on people’s feelings of stress (e.g., ‘How often have you felt nervous and stressed?”). Researchers have also operationally defined stress in terms of several physiological variables including blood pressure and levels of the stress hormone cortisol.

When psychologists use multiple operational definitions of the same construct, either within a study or across studies, they are using converging operations. The idea is that the various operational definitions are “converging” or coming together on the same construct. When scores based on several different operational definitions are closely related to each other and produce similar patterns of results, this constitutes good evidence that the construct is being measured effectively and that it is useful. The various measures of stress, for example, are all correlated with each other and have all been shown to be correlated with other variables such as immune system functioning (also measured in a variety of ways) [SM04]. This is what allows researchers eventually to draw useful general conclusions, such as “stress is negatively correlated with immune system functioning”, as opposed to more specific and less useful ones, such as “people’s scores on the Perceived Stress Scale are negatively correlated with their white blood counts”.

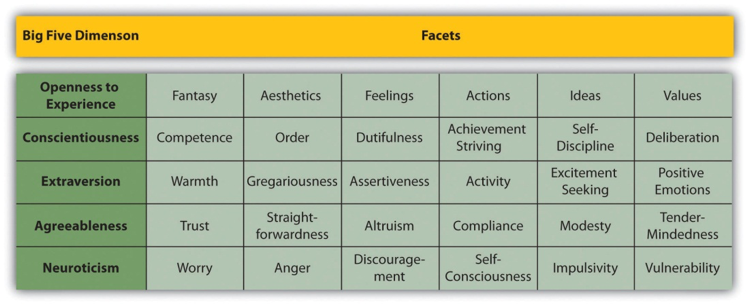

The psychologist S. S. Stevens suggested that scores can be assigned to individuals in a way that communicates more or less quantitative information about the variable of interest [Ste46]. For example, the officials at a 100 meter race could simply rank the runners by the order in which they crossed the finish line (first, second, etc.), or they could time each runner to the nearest tenth of a second using a stopwatch (11.5 s, 12.1 s, etc.). In either case, the officials would be measuring the runners’ performances by systematically assigning scores to represent those performances. But while the rank ordering procedure communicates the fact that the second-place runner took longer to finish than the first-place finisher, the stopwatch procedure also communicates how much longer the second-place finisher took. Stevens actually suggested four different levels of measurement (which he called “scales of measurement”) that correspond to four different levels of quantitative information that can be communicated by a set of scores.

Nominal scale measurements are used for categorical variables and involve assigning “scores” that are labels. Category labels communicate whether any two individuals are the same or different in terms of the variable being measured. For example, you might ask your research participants whether they prefer coffee or tea and enter this this information into a spreadsheet. Or you might ask people what their favorite type of pet is (e.g., dog, cat, bird, hamster, lizard, etc.). In these cases, you have measured nominal-level variables. The essential point about nominal scales is that they permit you to categorize, or group, measurements together so that measurements in the same category are the same and measurements in different categories are different. For example, once you measure your participants’ favorite pet types, you can group all the “cat people” together into one category, group all the “hamster people” together into a second category, etc. All the participants in the “cat person” category are similar on the pet preference variable because they all prefer cats. Those in the “cat person” category and from those in “hamster person” category differ on the pet preference variable. That’s all that nominal-level measurements permit: placing measurements into categories. Nominal scales thus embody the “lowest”, simplest level of measurement.

Like nominal scale measurements, ordinal scale measurements also involve assigning scores so that measurements can be placed into categories. However, ordinal scale measurements also represent the order or rank of the categories. For example, a researcher wishing to measure students’ health-related behavior might ask students to specify how many times in the past month they went to the campus gym, providing four possible reponses: “not at all”, “1-3 times, “4-10 times”, and “more than 10 times”. As with nominal scale measurements, responses to this question can be placed into categories such that all the “not at all” respondants are in one category, all the “1-3 times” respondants are in another category, etc. However, ordinal scale measurements permit us to order these categories. This is what distinguishes ordinal scale measurements from nominal scale measurements. For example, we can say that each respondant in “not at all” category use the campus gym less than the each of the respondants in the “1-3 times” category. The ability to order the categories means that ordinal scale measurements communicate not only whether any two individuals are the same or different in terms of the variable being measured but also whether one measurement is higher/lower, more/less, above/below, etc. on that variable.

On the other hand, ordinal scale measurements fail to capture important information that you might be interested in. In particular, any three ordinal scale measurements can be placed into a sensible order, but we cannot say much about the difference between any of these measurements. For example, imagine we have responses from our gym use question. One is “1-3 times”, one is “4-10 times”, and one is “more than 10 times”. The ordinal scale of our measurement means that we can sensibly claim that the “4-10 times” student should be placed in between the other two student in terms of frequency of gym use: this student used the gym less than the “more than 10 times” respondent, but more than the “1-3 times” student. What about the difference between these students? How much more did the “more than 10 times” student use the gym than the “4-10 times” student? Is the difference between these two students’ gym usage the same as the difference between the “1-3 times” student and the “4-10 times” student? Ordinal scale measurements do not allow us to answer these questions.

Like oridnal scale measurements, interval scale measurements involve assigning scores so that measurements can be placed into categories that can be ordered. However, interval scale measurements also create categories that allow the intervals or differences between categories to be interpreted. As an example, consider either the Fahrenheit or Celsius temperature scales. The difference between 30 degrees and 40 degrees is equivalent to the difference between 80 degrees and 90 degrees. Similarly, the difference between 37 degrees and 39 degrees is equivalent to the difference between -20 degrees and -20 degrees. This is because the categories are placed into their correct ordering, the spacing between categories is meaningful. For tempurature, each 1-degree interval (e.g., 75 degrees vs. 76 degrees) has the same physical meaning (in terms of the kinetic energy of molecules).

Being able to interpret the intervals between measurements allow us to sesibly perform addition and subtraction. For example, if we are dealing with measurements of tempurature, we can confidently state that \(76-75 = 1\) degree and that \(34-33 = 1\) degree and \(76-75 = 34-33\) . We can also state that \(75+5 = 85-5 = 80\) degrees.

Like interval scale measurements, ratio scale measurements also involve assigning scores so that measurements can be placed into categories that can be ordered whose intervals, and thus differences, are interpretable. However, ratio scale measurements also permit researchers to sensibly talk about the ratio betwen measurements. Height measured in centimeters is a good example of a ratio scale measurement. If we measured the height of two research participants, finding that one was 100 centimeters tall and the other was 200 centimeters tall it would be reasonble to say that the second participant is twice as tall as the first. Other examples of ratio scale measurements are counts of discrete objects or events such as the number of siblings one has or the number of questions a student answers correctly on an exam. Like a nominal scale measurement, ratio scale measurements provide a category for each measurement (with ratio scales, the numbers serve as category labels). Like an ordinal scale, the measurements can be put into a sensible order (e.g., 2, 3, 4). Like an interval scale, differences (e.g., 2 vs. 3 and 3 vs. 4) can be interpreted because the differences (or intervals) have the same meaning everywhere on the scale. However, in addition, the same ratio at two places on the scale also carries the same meaning (see Figure 10.3 ).

Another way to think about ratio scale measurements is that they involves assigning scores in such a way that there is a true zero point that represents the complete absence of the quantity that is being measured. It turns out that if a score of zero indicates the absence then ratios of measurements are sensible and vice versa! Zero on the Kelvin scale is absolute zero at which point all molecular motion ceases and, thus, there is no lower tempurature possible. This means that zero degrees Kelvin is a true zero and the Kelvin scale a ratio scale. Thus, we can sensibly say that a gas that is 200 degrees Kelvin has twice the kinetic energy of a gas that is 100 degrees Kelvin.

All frequencies, proportions, and percentages are ratio scale measurements. Imagine we measure the amount of money you have in your pocket right now (25 cents, 50 cents, etc.). Money is measured on a ratio scale because, in addition to having the properties of an interval scale, it has a true zero point: if you have zero money, this actually implies the absence of money. Furthermore, it clearly makes sense to say that someone with 50 cents has twice as much money as someone with only 25 cents.

One difficulty in trying to figure out whether a measurement is on a ratio scale is that many interval scale measurements can produce scores of zero that do not indicate the absence of the quantity being measured. For example, the Fahrenheit scale temperature has a zero point, but zero degrees Fahrenheit is completely arbitrary in the same way that 17 degrees Fahrenheit and -23 degrees Fahrenhei are. Therefore, degrees Fahrenheit is not a ratio scale measurement. What about degrees Celcius? The Celcius scale has a non-arbitrary zero - zero degrees Celcius indicates the tempurate at which water freezes. However, zero degrees Celcius does not indicate the absence of tempurature and is thus not a ratio scale measurement. For this reason, it is often easier to ask about whether ratios of scores make sense instead of asking about whether a measurement has a true zero. For example, it makes no sense to say that 40 degrees Fahrenheit is twice as hot as 20 degrees Fahrenheit is (or twice as much heat, etc.). The same is true of degrees Celcius. Thus, neither Fahrenheit nor Celcius are ratio scale measurements.

Fig. 3.2 Summary of levels of measurement ¶

Stevens’s levels of measurement are important for at least two reasons. First, they emphasize the generality of the concept of measurement. Although people do not normally think of categorizing or ranking as measurement, that’s all measurement really is. Second, the scales of measurement inform the statistical procedures that can be used with the data and the conclusions that can be drawn. With nominal scale measurements, for example, the only available measure of central tendency is the mode. Also, ratio-level measurement is the only level that allows meaningful statements about ratios of scores. One cannot say that someone with an IQ of 140 is twice as intelligent as someone with an IQ of 70 because IQ is an interval scale measurement, but one can say that someone with six siblings has twice as many siblings as someone with three because number of siblings is measured at the ratio level.

Again, measurement involves assigning scores to individuals so that they represent some characteristic of the individuals. But how do researchers know that the scores actually represent the target characteristic, especially when it is a construct like intelligence, self-esteem, depression, or working memory capacity? The answer is that they conduct research using the measure to confirm that the scores make sense based on their understanding of the construct being measured. This is an extremely important point. Psychologists do not simply assume that their measures work. Instead, they collect data to demonstrate that they work. If their research does not demonstrate that a measure works as intended, they may stop using it or may work to improve it.

As an informal example, imagine that you have been dieting for a month. Your clothes seem to be fitting more loosely, and several friends have asked if you have lost weight. If at this point your bathroom scale indicated that you had lost 10 pounds, this would make sense and you would continue to use the scale. But if it indicated that you had gained 10 pounds, you would rightly conclude that it was broken and either fix it or get rid of it. In evaluating a measurement method, psychologists consider two general dimensions: reliability and validity. We will go into these two dimensions in depth in the next sections. In general, reliability is about whether the measurement is free from error, and behaves consistently. Validity is about what the measure means (does your measure actually measure what you want it to).

Reliability refers to the consistency of a measure. Psychologists consider three types of consistency: reliability over time (test-retest reliability), reliability across items (internal consistency), and reliability across different researchers (inter-rater reliability).

When researchers measure a construct that they assume to be consistent across time, they expect the scores they obtain to also be consistent across time. Test-retest reliability captures the extent to which this is actually the case. For example, intelligence is generally thought to be consistent across time. A person who is highly intelligent today should also be highly intelligent next week. This means that any reliable measure of intelligence should produce roughly the same scores for this individual next week as it does today. Clearly, a measure that produces highly inconsistent scores over time cannot be a very good measure of a construct that is supposed to be consistent.

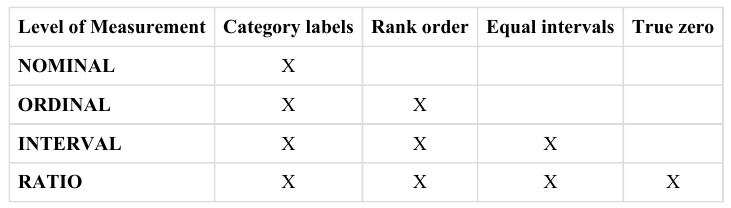

Assessing test-retest reliability requires using the measure on a group of people at one time, using it again on the same group of people at a later time, and then looking at test-retest correlation between the two sets of scores. This is typically done by graphing the data in a scatterplot and computing Pearson’s \(r\) . Figure 3.3 shows the correlation between two sets of scores of several university students on the Rosenberg Self-Esteem Scale, administered two times, a week apart. Pearson’s \(r\) for these data is +.95. In general, a test-retest correlation of +.80 or greater is considered to indicate good reliability, though it very much depends on the construct and the application.

Fig. 3.3 Test-retest correlation between two sets of scores of several college students on the rosenberg self-esteem scale, given two times a week apart ¶

Again, high test-retest correlations make sense when the construct being measured is assumed to be consistent over time, which is the case for intelligence, self-esteem, and the Big Five personality dimensions. But other constructs are not assumed to be stable over time. The very nature of mood, for example, is that it changes. So a measure of mood that produced a low test-retest correlation over a period of a month would not be a cause for concern.

A second kind of reliability is internal consistency, which is the consistency of people’s responses across the items on a multiple-item measure. In general, all the items on such measures are supposed to reflect the same underlying construct, so people’s scores on those items should be related to each other. For example, on the Rosenberg Self-Esteem Scale, people who agree that they are a person of worth should tend to agree that that they have a number of good qualities. If people’s responses to the different items are not related to each other, then it would no longer make sense to claim that they are all measuring the same underlying construct. This is as true for behavioral and physiological measures as it is for self-report measures. For example, a researcher might operationalize risk seeking by having people make a series of bets in a simulated game of roulette. This measure would be internally consistent to the extent that individual participants’ bets were consistently high or low across trials.

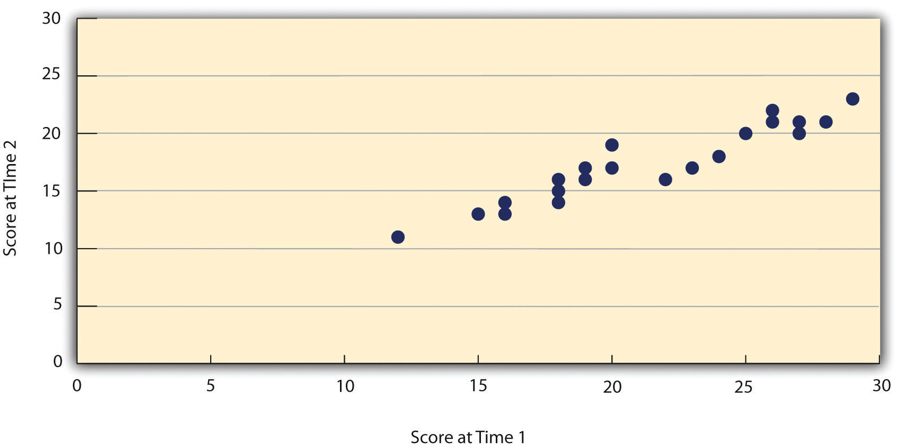

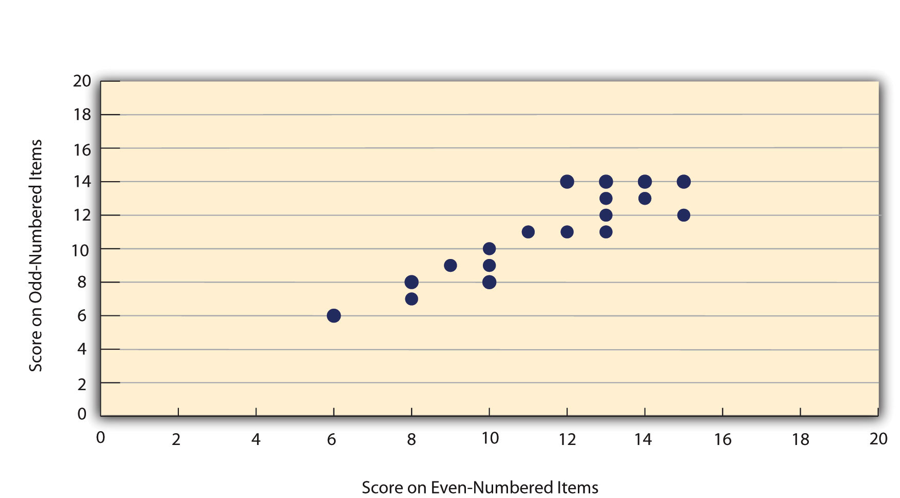

Like test-retest reliability, internal consistency can only be assessed by collecting and analyzing data. One approach is to look at a split-half reliability. This involves splitting the items into two sets, such as the first and second halves of a test or the even-numbered and odd-numbered items. Then each set of items is used to compute a separate score, and the relationship between the two sets of scores is examined. For example, Figure 3.4 shows the correlation between several university students’ scores on the even-numbered items and their scores on the odd-numbered items of the Rosenberg Self-Esteem Scale. Pearson’s \(r\) for these data is +.88. A split-half correlation of +.80 or greater is generally considered good internal consistency (though, again, it greatly depends on the details of the measures).

Fig. 3.4 Correlation between several college students’ scores on the even-numbered items and their scores on the odd-numbered items of the Rosenberg Self-Esteem Scale ¶

Perhaps the most common measure of internal consistency used by researchers in psychology is a statistic called Cronbach’s \(\alpha\) (the Greek letter alpha). Conceptually, \(\alpha\) is the mean of all possible split-half correlations for a set of items. For example, there are 252 ways to split a set of 10 items into two sets of five. Cronbach’s \(\alpha\) would be the mean of the 252 split-half correlations. Note that this is not how \(\alpha\) is actually computed, but it is a correct way of interpreting the meaning of this statistic. Again, a value of +.80 or greater is generally taken to indicate good internal consistency.

Many behavioral measures involve judgment on the part of an rater or observer. For example, a researcher interested in measuring students’ social skills could make video recordings as students interacted with each other when meeting for the first time. The researcher could then have a rater watch the videos and provide a quantitative judgment about each student’s social skills. Should we believe that social skills that can be reliably detected by an attentive observer?

In situations like this, we will probably calculate inter-rater reliability, which quantifies the extent to which different observers are consistent in their judgments. A researcher interested in social skills could have two or more observers watch and rate the social skills demonstrated in the videos. To the extent that social skills are being evaluated reliably, we should expect that the ratings from our different observers should be highly correlated with each other. Inter-rater reliability would also have been measured in Bandura’s Bobo doll study. In that case, the observers’ ratings of how many acts of aggression a particular child committed while playing with the Bobo doll should have been highly correlated. Interrater reliability is often assessed using Cronbach’s \(\alpha\) when the judgments are quantitative (ordinal, interval, or ratio) or an analogous statistic called Cohen’s \(\kappa\) (the Greek letter kappa) when they are nominal.

Validity is the extent to which the scores from a measure represent the construct they are intended to represent. But how do researchers determine this? We have already considered one factor that they take into account - reliability. When a measure has good test-retest reliability and internal consistency, researchers should be more confident that the scores represent what they are supposed to. There has to be more to it, however, because a measure can be extremely reliable but have no validity whatsoever. As an absurd example, imagine someone who believes that people’s index finger length reflects their self-esteem and therefore tries to measure self-esteem by holding a ruler up to people’s index fingers. Although this measure would have extremely good test-retest reliability, it would have absolutely no validity. The fact that one person’s index finger is a centimeter longer than another’s would indicate nothing about which one had higher self-esteem.

Discussions of validity usually divide it into several distinct “types”. But a good way to interpret these types is that they are other kinds of evidence - in addition to reliability - that should be taken into account when judging how good a measure is. Here we consider three basic kinds of validity: face validity, content validity, and criterion validity.

Face validity is the extent to which a measurement method appears “on its face” to measure the construct of interest. Most people would expect a self-esteem questionnaire to include items about whether they see themselves as a person of worth and whether they think they have good qualities. So a questionnaire that included these kinds of items would have good face validity. The finger-length method of measuring self-esteem, on the other hand, seems to have nothing to do with self-esteem and therefore has poor face validity. Although face validity can be assessed quantitatively - for example, by having a large sample of people rate a measure in terms of whether it appears to measure what it is intended to - it is usually assessed informally.

Face validity is at best a very weak kind of evidence that a measurement method is measuring what it is supposed to. One reason is that it is based on people’s intuitions about human behavior, which are frequently wrong. It is also the case that many established measures in psychology work quite well despite lacking face validity. The Minnesota Multiphasic Personality Inventory-2 (MMPI-2) measures many personality characteristics and disorders by having people decide whether each of over 567 different statements applies to them - where many of the statements do not have any obvious relationship to the construct that they measure. For example, the items “I enjoy detective or mystery stories” and “The sight of blood doesn’t frighten me or make me sick” both measure the suppression of aggression. In this case, it is not the participants’ literal answers to these questions that are of interest, but rather whether the pattern of the participants’ responses to a series of questions matches those of individuals who tend to suppress their aggression.

Content validity is the extent to which a measure “covers” the construct of interest. For example, if a researcher conceptually defines test anxiety as involving both sympathetic nervous system activation (leading to nervous feelings) and negative thoughts, then his measure of test anxiety should include items about both nervous feelings and negative thoughts. Or consider that attitudes are usually defined as involving thoughts, feelings, and actions toward something. According to this conceptual definition, a person has a positive attitude toward exercise to the extent that he or she thinks positive thoughts about exercising, feels good about exercising, and actually exercises. So to have good content validity, a measure of people’s attitudes toward exercise would have to reflect all three of these aspects. Like face validity, content validity is not usually assessed quantitatively. Instead, it is assessed by carefully checking the measurement method against the conceptual definition of the construct.

Criterion validity is the extent to which people’s scores on a measure are related to other variables (known as criteria) that one would expect valid scores to be correlated with. For example, people’s scores on a new measure of test anxiety should be negatively correlated with student’s performance on an important exam. If it were found that people’s scores were in fact negatively correlated with their exam performance, then this would be a piece of evidence that these scores really represent people’s test anxiety. But if it were found that people scored equally well on the exam regardless of their test anxiety scores, then this would cast doubt on the validity of the measure.

A criterion can be any variable that one has reason to think should be related with the construct being measured, and there will usually be many of them. For example, one would expect test anxiety scores to be negatively correlated with exam performance and course grades and positively correlated with general anxiety and with blood pressure during an exam. Or imagine that a researcher develops a new measure of physical risk taking. People’s scores on this measure should be related to their participation in “extreme” activities such as rock climbing and skydiving, the number of speeding tickets they have received, and even the number of broken bones they have had over the years. When the criterion is measured at the same time as the construct, criterion validity is referred to as concurrent validity; however, when the criterion is measured at some point in the future (after the construct has been measured), it is referred to as predictive validity (because scores on the measure have “predicted” a future outcome).

Criteria can also include other measures of the same construct. For example, one would expect new measures of test anxiety or physical risk taking to be positively correlated with existing measures of the same constructs. This is known as convergent validity.

Assessing convergent validity requires collecting data using the measure. Researchers John Cacioppo and Richard Petty did this when they created their self-report Need for Cognition Scale to measure how much people value and engage in thinking [CP82]. In a series of studies, they showed that people’s scores were positively correlated with their scores on a standardized academic achievement test, and that their scores were negatively correlated with their scores on a measure of dogmatism (which represents a tendency toward obedience). In the years since it was created, the Need for Cognition Scale has been used in literally hundreds of studies and has been shown to be correlated with a wide variety of other variables, including the effectiveness of an advertisement, interest in politics, and juror decisions [PBrinolLM09].

Discriminant validity, on the other hand, is the extent to which scores on a measure are not correlated with measures of variables that are conceptually distinct. For example, self-esteem is defined as a general attitude toward the self that is fairly stable over time. It is not the same as mood, which is how good or bad one happens to be feeling right now. So people’s scores on a new measure of self-esteem should not be very highly correlated with measures of mood. If the new measure of self-esteem were highly correlated with a measure of mood, it could be argued that the new measure is not really measuring self-esteem, but instead is measuring mood.

When they created the Need for Cognition Scale, Cacioppo and Petty also provided evidence of discriminant validity by showing that people’s scores were not correlated with certain other variables. For example, they found only a weak correlation between people’s need for cognition and a measure of their cognitive style - the extent to which they tend to think analytically by breaking ideas into smaller parts or holistically in terms of “the big picture”. They also found no correlation between people’s need for cognition and measures of their test anxiety or their tendency to respond in socially desirable ways. All these low correlations provide evidence that the measure is reflecting a conceptually distinct construct.

So far in this chapter, we have considered several basic ideas about the nature of psychological constructs and their measurement. But now imagine that you are in the position of actually having to measure a psychological construct for a research project. How should you proceed? Broadly speaking, there are four steps in the measurement process: (a) conceptually defining the construct, (b) operationally defining the construct, (c) implementing the measure, and (d) evaluating the measure. In this section, we will look at each of these steps in turn.

Having a clear and complete conceptual definition of a construct is a prerequisite for good measurement. For one thing, it allows you to make sound decisions about exactly how to measure the construct. If you had only a vague idea that you wanted to measure people’s “memory”, for example, you would have no way to choose whether you should have them remember a list of vocabulary words, a set of photographs, a newly learned skill, or an experience from long ago. Because psychologists now conceptualize memory as a set of semi-independent systems, you would have to be more precise about what you mean by “memory”. If you are interested in long-term semantic memory (memory for facts), then having participants remember a list of words that they learned last week would make sense, but having them remember and execute a newly learned skill would not. In general, there is no substitute for reading the research literature on a construct and paying close attention to how others have defined it.

It is often a good idea to use an existing measure that has been used successfully in previous research. Among the advantages are that (a) you save the time and trouble of creating your own, (b) there is already some evidence that the measure is reliable and valid (if it has been used successfully), and (c) your results can more easily be compared with and combined with previous results. In fact, if there already exists a reliable and valid measure of a construct, other researchers might expect you to use it unless you have a good and clearly stated reason for not doing so.

If you choose to use an existing measure, you may still have to choose among several alternatives. You might choose the most common one, the one with the best evidence of reliability and validity, the one that best measures a particular aspect of a construct that you are interested in (e.g., a physiological measure of stress if you are most interested in its underlying physiology), or even the one that would be easiest to use. For example, the Ten-Item Personality Inventory (TIPI) is a self-report questionnaire that measures all the Big Five personality dimensions with just 10 items [GRS03]. It is not as reliable or valid as longer and more comprehensive measures, but a researcher might choose to use it when testing time is severely limited.

When an existing measure was created primarily for use in scientific research, it is usually described in detail in a published research article and is free to use in your own research - with a proper citation. Later, you might find that researchers who use the same measure describe it only briefly but provide a reference to the original article, in which case you would have to get the details from the original article. The American Psychological Association also publishes the Directory of Unpublished Experimental Measures, which is an extensive catalog of measures that have been used in previous research. Many existing measures - especially those that have applications in clinical psychology - are proprietary. This means that a publisher owns the rights to them and that you would have to purchase them. These include many standard intelligence tests, the Beck Depression Inventory, and the Minnesota Multiphasic Personality Inventory (MMPI). Details about many of these measures and how to obtain them can be found in other reference books, including Tests in Print and the Mental Measurements Yearbook. There is a good chance you can find these reference books in your university library.

Instead of using an existing measure, you might want to create your own. Perhaps there is no existing measure of the construct you are interested in or existing ones are too difficult or time-consuming to use. Or perhaps you want to use a new measure specifically to see whether it works in the same way as existing measures - that is, to evaluate convergent validity. In this section, we consider some general issues in creating new measures that apply equally to self-report, behavioral, and physiological measures. More detailed guidelines for creating self-report measures are presented in the chapter found here .

First, be aware that most new measures in psychology are really variations of existing measures, so you should still look to the research literature for ideas. Perhaps you can modify an existing questionnaire, create a paper-and-pencil version of a measure that is normally computerized (or vice versa), or adapt a measure that has traditionally been used for another purpose. For example, the famous Stroop task [Str35] - in which people quickly name the colors that various color words are printed in— - has been adapted for the study of social anxiety. Socially anxious people are slower at color naming when the words have negative social connotations such as “stupid” [AFF02].

When you create a new measure, you should strive for simplicity. Remember that your participants are not as interested in your research as you are and that they will vary widely in their ability to understand and carry out whatever task you give them. You should create a set of clear instructions using simple language that you can present in writing or read aloud (or both). It is also a good idea to include one or more practice items so that participants can become familiar with the task, and to build in an opportunity for them to ask questions before continuing. It is also best to keep the measure brief to avoid boring or frustrating your participants to the point that their responses start to become less reliable and valid.

The need for brevity, however, needs to be weighed against the fact that it is nearly always better for a measure to include multiple items rather than a single item. There are two reasons for this. One is a matter of content validity. Multiple items are often required to cover a construct adequately. The other is a matter of reliability. People’s responses to single items can be influenced by all sorts of irrelevant factors - misunderstanding the particular item, a momentary distraction, or a simple error such as checking the wrong response option. But when several responses are summed or averaged, the effects of these irrelevant factors tend to cancel each other out to produce more reliable scores. Remember, however, that multiple items must be structured in a way that allows them to be combined into a single overall score by summing or averaging. To measure “financial responsibility”, a student might ask people about their annual income, obtain their credit score, and have them rate how “thrifty” they are - but there is no obvious way to combine these responses into an overall score. To create a true multiple-item measure, the student might instead ask people to rate the degree to which 10 statements about financial responsibility describe them on the same five-point scale.

Finally, the very best way to assure yourself that your measure has clear instructions, includes sufficient practice, and is an appropriate length is to test several people (family and friends often serve this purpose nicely). Observe them as they complete the task, time them, and ask them afterward to comment on how easy or difficult it was, whether the instructions were clear, and anything else you might be wondering about. Obviously, it is better to discover problems with a measure before beginning any large-scale data collection.

You will want to implement any measure in a way that maximizes its reliability and validity. In most cases, it is best to test everyone under similar conditions that, ideally, are quiet and free of distractions. Testing participants in groups is often done because it is efficient, but be aware that it can create distractions that reduce the reliability and validity of the measure. As always, it is good to use previous research as a guide. If others have successfully tested people in groups using a particular measure, then you should consider doing it too.

Be aware also that people can react in a variety of ways to being measured, and these reactions can reduce the reliability and validity of the scores. Although some disagreeable participants might intentionally respond in ways meant to disrupt a study, participant reactivity is more likely to take the opposite form. Agreeable participants might respond in ways they believe they are expected to. They might engage in socially desirable responding. For example, people with low self-esteem agree that they feel they are a person of worth not because they really feel this way but because they believe this is the socially appropriate response and do not want to look bad in the eyes of the researcher. Additionally, research studies can have built-in demand characteristics: subtle cues that reveal how the researcher expects participants to behave. For example, a participant whose attitude toward exercise is measured immediately after she is asked to read a passage about the dangers of heart disease might reasonably conclude that the passage was meant to improve her attitude. As a result, she might respond more favorably because she believes she is expected to by the researcher. Finally, your own expectations can bias participants’ behaviors in unintended ways.

There are several precautions you can take to minimize these kinds of reactivity. One is to make the procedure as clear and brief as possible so that participants are not tempted to vent their frustrations on your results. Another is to make clear to participants that you will do everything you can to ensure’ their anonymity. If you are testing them in groups, be sure that they are seated far enough apart that they cannot see each other’s responses. Give them all the same type of writing implement so that they cannot be identified by, for example, the pink glitter pen that they used. You can even allow them to seal completed questionnaires into individual envelopes or put them into a drop box where they immediately become mixed with others’ questionnaires. Although informed consent requires telling participants what they will be doing, it does not require revealing your hypothesis or other information that might suggest to participants how you expect them to respond. A questionnaire designed to measure financial responsibility need not be titled “Are You Financially Responsible?” It could be titled “Money Questionnaire” or have no title at all. Finally, the effects of your expectations can be minimized by arranging to have the measure administered by a helper who is “blind” or unaware of its intent or of any hypothesis being tested. Regardless of whether this is possible, you should standardize all interactions between researchers and participants - for example, by always reading the same set of instructions word for word.

Once you have used your measure on a sample of people and have a set of scores, you are in a position to evaluate it more thoroughly in terms of reliability and validity. Even if the measure has been used extensively by other researchers and has already shown evidence of reliability and validity, you should not assume that it worked as expected for your particular sample and under your particular testing conditions. Regardless, you now have additional evidence bearing on the reliability and validity of the measure, and it would make sense to add that evidence to the research literature.

In most research designs, it is not possible to assess test-retest reliability because participants are tested at only one time. For a new measure, you might design a study specifically to assess its test-retest reliability by testing the same set of participants at two separate times. In other cases, a study designed to answer a different question still allows for the assessment of test-retest reliability. For example, a psychology instructor might measure his students’ attitudes toward critical thinking using the same measure at the beginning and end of the semester to see if there is any change. Even if there is no change, he could still look at the correlation between students’ scores at the two times to assess the measure’s test-retest reliability. It is also customary to assess internal consistency for any multiple-item measure - usually by looking at a split-half correlation or Cronbach’s \(\alpha\) .

Convergent and discriminant validity can be assessed in various ways. For example, if your study included more than one measure of the same construct or measures of conceptually distinct constructs, then you should look at the correlations among these measures to be sure that they fit your expectations. Note also that a successful experimental manipulation also provides evidence of criterion validity. Recall that MacDonald and Martineau manipulated participant’s moods by having them think either positive or negative thoughts, and after the manipulation their mood measure showed a distinct difference between the two groups. This simultaneously provided evidence that their mood manipulation worked and that their mood measure was valid.

But what if your newly collected data cast doubt on the reliability or validity of your measure? The short answer is that you have to ask why. It could be that there is something wrong with your measure or how you administered it. It could be that there is something wrong with your conceptual definition. It could be that your experimental manipulation failed. For example, if a mood measure showed no difference between people whom you instructed to think positive versus negative thoughts, maybe it is because the participants did not actually think the thoughts they were supposed to or that the thoughts did not actually affect their moods. In short, it is “back to the drawing board” to revise the measure, revise the conceptual definition, or try a new manipulation.